My research is in Human-Computer Interaction with a focus on collaborative and accessible AI agents. I explore ways to make agents robustly perceive multimodal context, collaborate with users proactively to preserve user agency, and adapt continually to support creative work in design, education, and beyond.

I am currently a Ph.D. student at the CMU HCI Institute, advised by Prof. Jeff Bigham and Prof. Amy Pavel. I have also worked closely with Prof. Jason Wu during my time at CMU.

For more details, check out my: CV | Google Scholar | LinkedIn

People

Over the past several years, I have built long-term collaborations with early-career researchers who do exciting work in HCI, software engineering, and machine learning. A few are listed below:

- Peya Mowar (2023~now): M.S. at CMU RI (Next: Ph.D. student at CMU RI) [Tab to Autocomplete | CodeA11y]

- Amanda Li (2023~now): B.S. and M.S. at CMU CS (Next: Ph.D. student at Stanford CS) [DreamStruct | UIClip | WaybackUI]

- Eryn Ma (2025~now): Ph.D. student at CMU S3D

Preprints

-

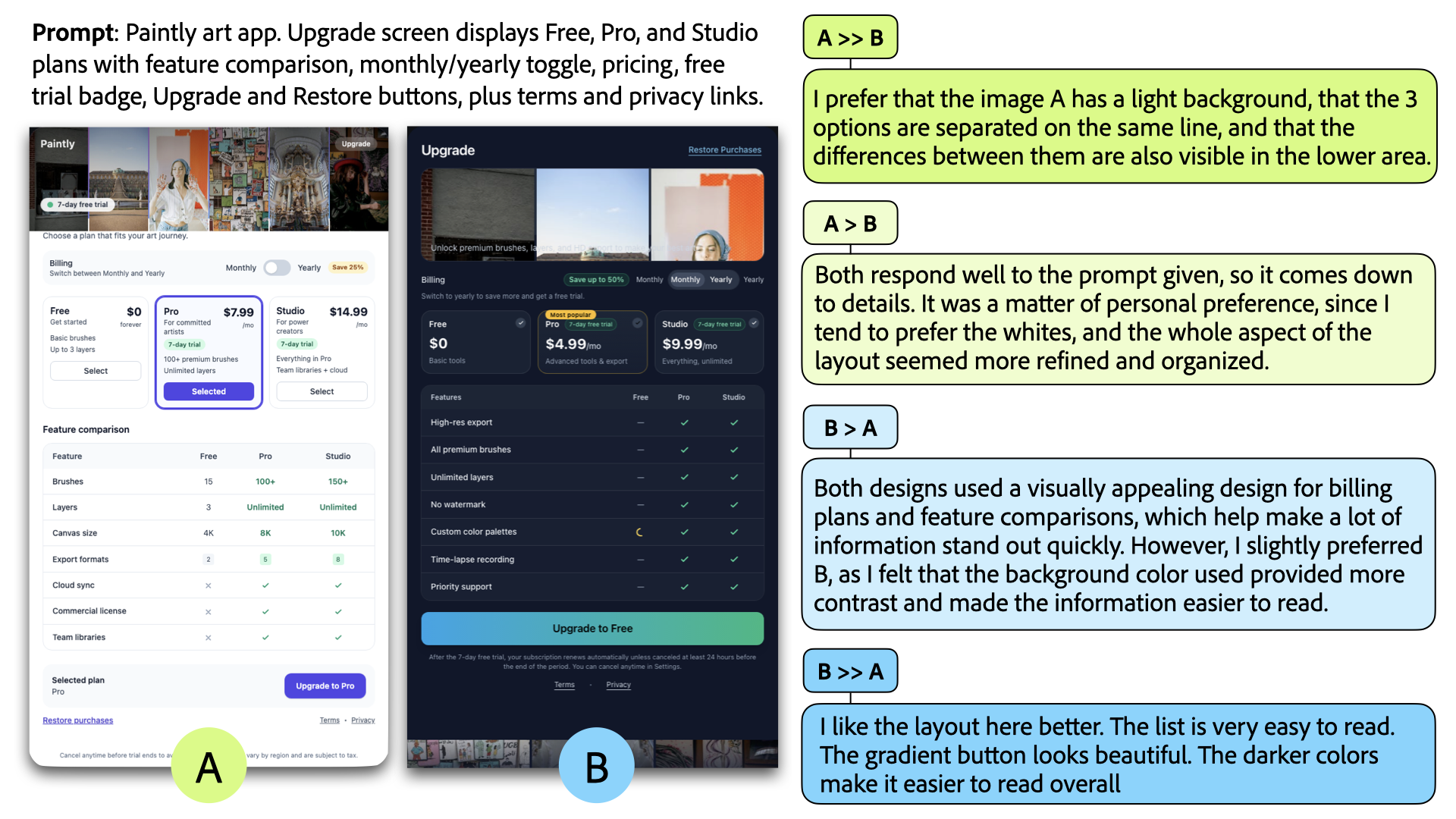

DesignPref: Capturing Personal Preferences in Visual Design Generation Yi-Hao Peng, Jeff Bigham, Jason Wu arXiv.2511.20513 project website | paper

-

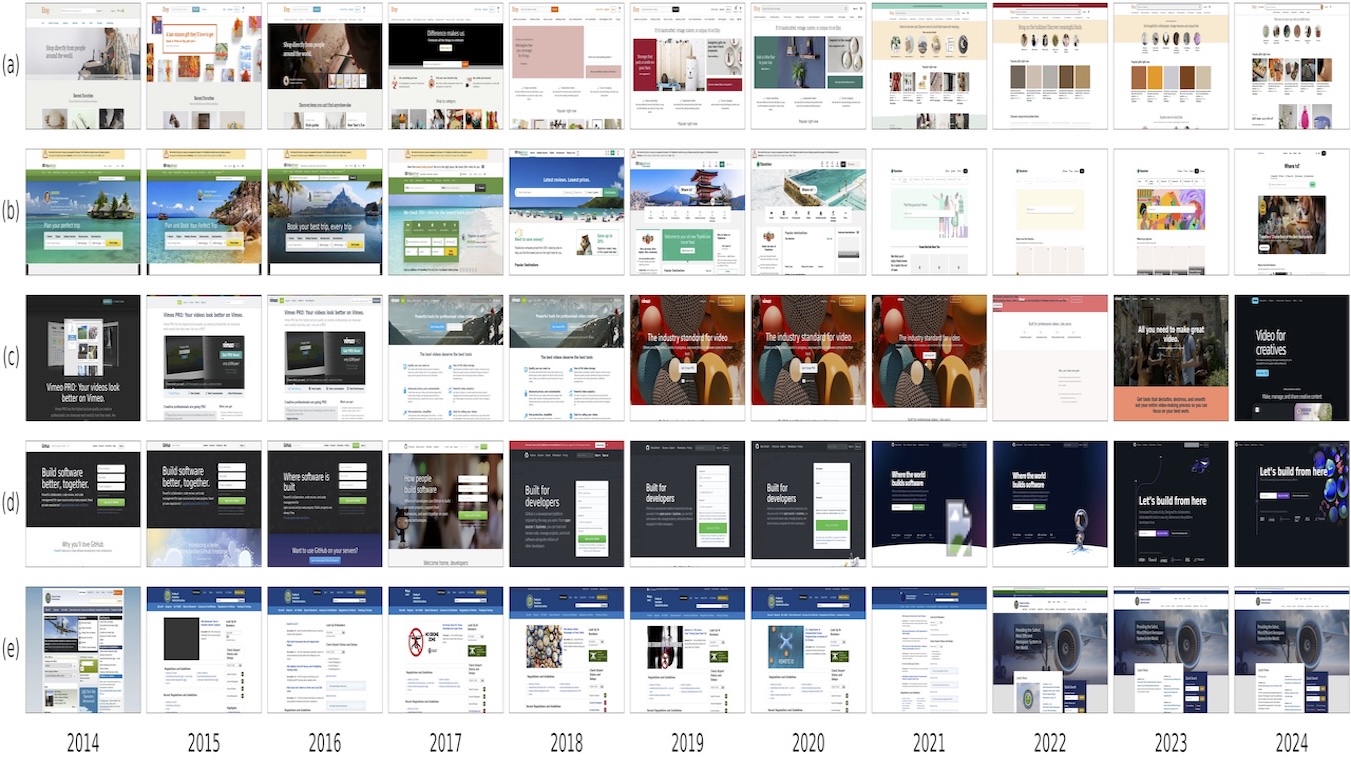

WaybackUI: A Dataset to Support the Longitudinal Analysis of Web User Interfaces Amanda Li, Yi-Hao Peng, Jeff Nichols, Jeff Bigham, Jason Wu arXiv.2512 project website | paper

-

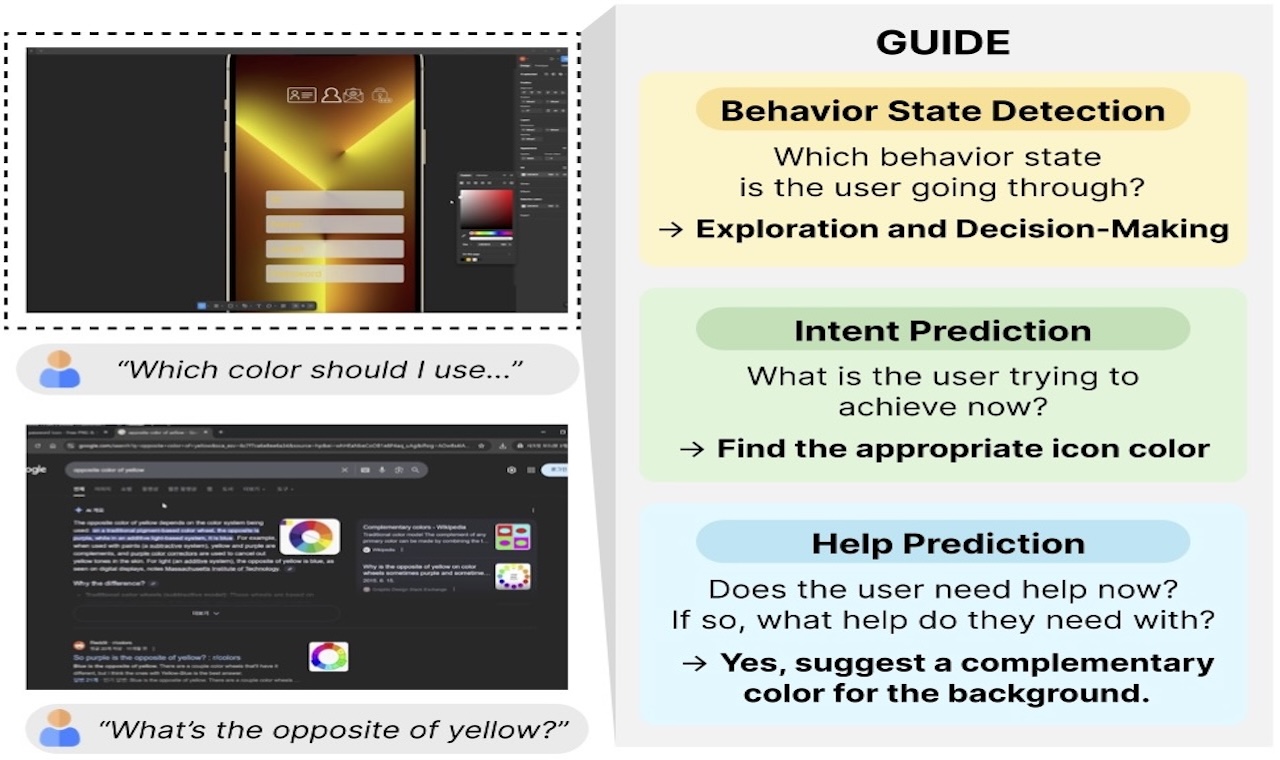

GUIDE: A Benchmark for Understanding and Assisting Users in Open-Ended GUI Tasks Saelyne Yang, Jaesang Yu, Yi-Hao Peng, Kevin Qinghong Lin, Jae Won Cho, Yale Song, Juho Kim arXiv.2512 project website | paper

-

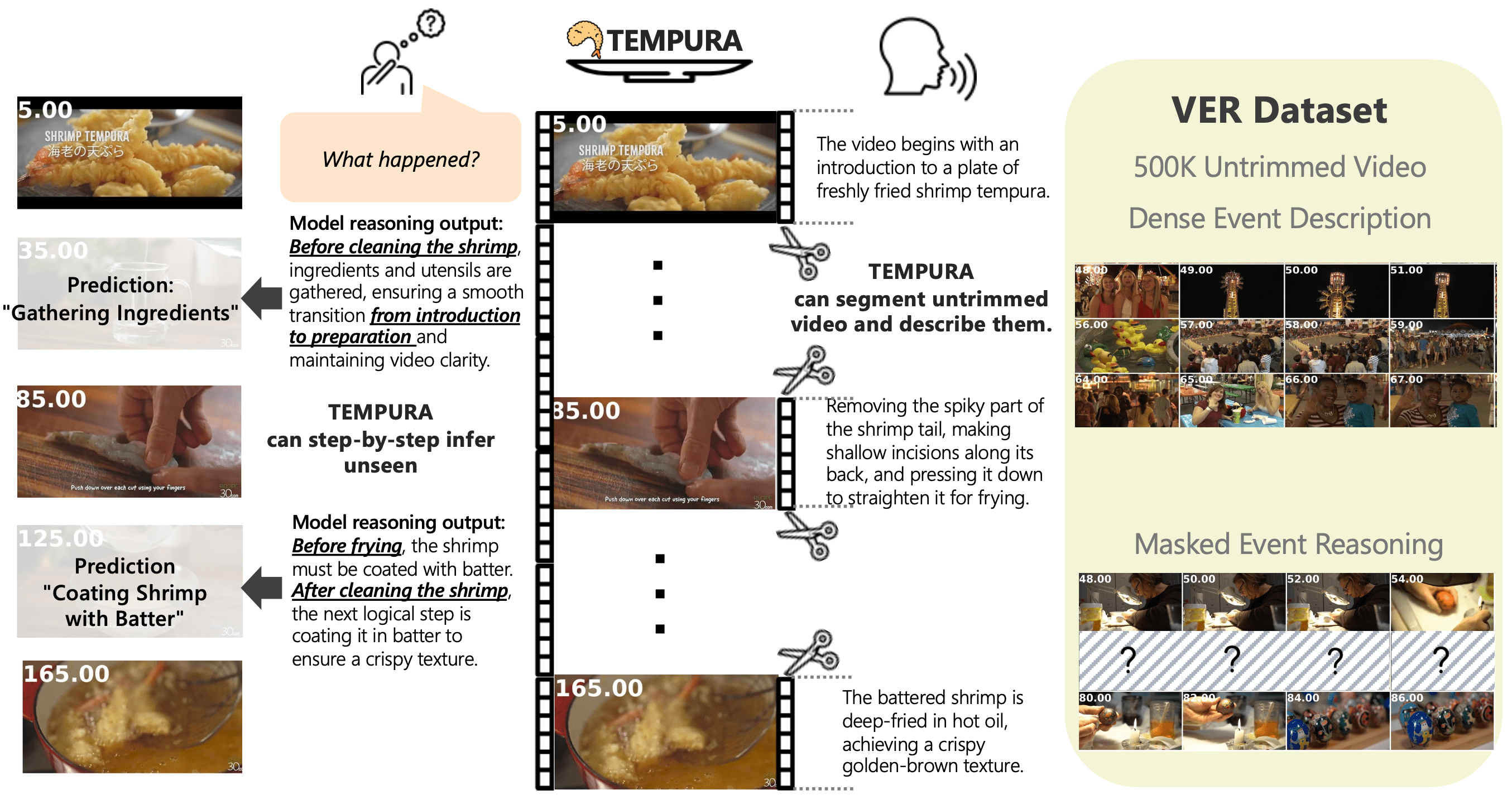

Tempura: Temporal Event Masked Prediction and Understanding for Reasoning in Action Jen-Hao Cheng, Huapeng Zhou, Vivian Wang, Yi-Hao Peng, Hsiang-Wei Huang, Wenhao Chai, Huayu Wang, Hou-I Liu, Kuang-Ming Chen, Cheng-Yen Yang, Yipeng Wang, Yi-Ling Chen, Vibhav Vineet, Qin Cai, Jenq-Neng Hwang arXiv.2505.01583 project website | paper

Publications

- Morae: Proactively Pausing UI Agents for User Choices Yi-Hao Peng, Dingzeyu Li, Jeff Bigham, Amy Pavel ACM Symposium on User Interface Software and Technology (UIST 2025) project website | paper

- StepWrite: Adaptive Planning for Speech-Driven Text Generation Hamza El Alaoui, Atieh Taheri, Yi-Hao Peng, Jeff Bigham ACM Symposium on User Interface Software and Technology (UIST 2025) project website | paper

- CodeA11y: Making AI Coding Assistants Useful for Accessible Web Development Peya Mowar, Yi-Hao Peng, Jason Wu, Aaron Steinfeld, Jeff Bigham ACM Conference on Human Factors in Computing Systems (CHI 2025) project website | paper

- Towards Bidirectional Human-AI Alignment: A Systematic Review for Clarifications, Framework, and Future Directions Hua Shen, Tiffany Knearem, Reshmi Ghosh, Kenan Alkiek*, Kundan Krishna*, Yachuan Liu*, Ziqiao Ma*, Savvas Petridis*, Yi-Hao Peng*, Qiwei Li*, Sushrita Rakshit*, Chenglei Si*, Yutong Xie*, Jeff Bigham, Frank Bentley, Joyce Chai, Zachary Lipton, Qiaozhu Mei, Rada Mihalcea, Michael Terry, Diyi Yang, Meredith Ringel Morris, Paul Resnick, David Jurgens Advances in Neural Information Processing Systems (NeurIPS 2025), Position Paper Track [Top 6%] project website | paper

- AutoPresent: Designing Structured Visuals From Scratch Jiaxin Ge*, Zora Wang*, Xuhui Zhou, Yi-Hao Peng, Sanjay Subramanian, Qinyue Tan, Maarten Sap, Alane Suhr, Daniel Fried, Graham Neubig, Trevor Darrell IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025) project website | paper

- DreamStruct: Understanding Slides and User Interfaces via Synthetic Data Generation Yi-Hao Peng, Faria Huq, Yue Jiang, Jason Wu, Amanda Li, Jeff Bigham, Amy Pavel European Conference on Computer Vision (ECCV 2024) project website | paper

- UIClip: A Data-driven Model for Assessing User Interface Design Jason Wu, Yi-Hao Peng, Amanda Li, Amanda Swearngin, Jeff Bigham, Jeff Nichols ACM Symposium on User Interface Software and Technology (UIST 2024) project website | paper | video preview

- Long-form Answers to Visual Questions Asked by Blind and Low Vision People Mina Huh, Fangyuan Xu, Yi-Hao Peng, Chongyan Chen, Hansika Murugu, Danna Gurari, Eunsol Choi, Amy Pavel Conference on Language Modeling (COLM 2024) [Oral, Top 2%] project website | paper | video preview

- AdvCAPTCHA: Creating Usable and Secure Audio CAPTCHA with Adversarial Machine Learning Hao-Ping (Hank) Lee, Wei-Lun Kao, Hung-Jui Wang, Ruei-Che Chang, Yi-Hao Peng, Fu-Yin Cherng, Shang-Tse Chen NDSS Symposium on Usable Security and Privacy (USEC 2024) paper | video preview

- Designing a conversational telepresence robot for homebound older adults Yaxin Hu, Laura Stegner, Yasmine Kotturi, Caroline Zhang, Yi-Hao Peng, Faria Huq, Yuhang Zhao, Jeff Bigham, Bilge Mutlu ACM Conference on Designing Interactive Systems (DIS 2024) paper | video preview

- GenAssist: Making Image Generation Accessible Mina Huh, Yi-Hao Peng, Amy Pavel ACM Symposium on User Interface Software and Technology (UIST 2023) [Best Paper Award, Top 1%] project website | paper | video preview

- Slide Gestalt: Automatic Structure Extraction in Slide Decks for Non-Visual Access Yi-Hao Peng, Peggy Chi, Anjuli Kannan, Meredith Ringel Morris, Irfan Essa ACM Conference on Human Factors in Computing Systems (CHI 2023) paper | video preview

- WebUI: A Dataset for Enhancing Visual UI Understanding with Web Semantics Jason Wu, Siyan Wang, Siman Shen, Yi-Hao Peng, Jeff Nichols, Jeff Bigham ACM Conference on Human Factors in Computing Systems (CHI 2023) [Best Paper Nominee, Top 5%] paper | video preview

- Reality Rifts: Wonder-ful Interfaces by Disrupting Perceptual Causality Lung-Pan Cheng, Yi Chen, Yi-Hao Peng, Christian Holz ACM Conference on Human Factors in Computing Systems (CHI 2023) paper | video preview

- AVScript: Accessible Video Editing with Audio-Visual Scripts Mina Huh, Saelyne Yang, Yi-Hao Peng, Xiang Anthony Chen, Young-Ho Kim, Amy Pavel ACM Conference on Human Factors in Computing Systems (CHI 2023) paper | video preview

- Diffscriber: Describing Visual Design Changes to Support Mixed-Ability Presentation Authoring Yi-Hao Peng, Jason Wu, Jeff Bigham, Amy Pavel ACM Symposium on User Interface Software and Technology (UIST 2022) project website | paper | video preview

- ImageExplorer: Multi-Layered Touch Exploration to Encourage Skepticism for Imperfect AI-Generated Image Captions Jaewook Lee, Jaylin Herskovitz, Yi-Hao Peng, Anhong Guo ACM Conference on Human Factors in Computing Systems (CHI 2022) paper | video preview | talk

- Slidecho: Flexible Non-Visual Exploration of Presentation Videos Yi-Hao Peng, Jeff Bigham, Amy Pavel ACM Conference on Computers and Accessibility (ASSETS 2021) project website | paper | talk

- Say It All: Feedback for Improving Non-Visual Presentation Accessibility Yi-Hao Peng, JiWoong Jang, Jeff Bigham, Amy Pavel ACM Conference on Human Factors in Computing Systems (CHI 2021) project website | paper | video preview | talk | slides

- StrengthGaming: Enabling Dynamic Repetition Tempo in Strength Training-based Exergame Design Sih-Pin Lai, Cheng-An Hsieh, Yu-Hsin Lin, Teepob Harutaipree, Shih-Chin Lin, Yi-Hao Peng, Lung-Pan Cheng, Mike Y. Chen ACM Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI 2020) paper | video preview | talk | demo recording

- WalkingVibe: Reducing Virtual Reality Sickness and Improving Realism while Walking in VR using Unobtrusive Head-mounted Vibrotactile Feedback Yi-Hao Peng, Carolyn Yu, Shi-Hong Liu, Chung-Wei Wang, Paul Taele, Neng-Hao Yu, Mike Y. Chen ACM Conference on Human Factors in Computing Systems (CHI 2020) paper | video preview | talk

- PersonalTouch: Improving Touchscreen Usability by Personalizing Accessibility Settings based on Individual User’s Touchscreen Interaction Yi-Hao Peng, Muh-Tarng Lin, Yi Chen, Tzu-Chuan Chen, Pin-Sung Ku, Paul Taele, Mike Y. Chen ACM Conference on Human Factors in Computing Systems (CHI 2019) paper | Apple WWDC 2019 Highlight: Developer Toolkit (ResearchKit) Integration (02:57)

- PhantomLegs: Reducing Virtual Reality Sickness using Head-Worn Haptic Devices Shi-Hong Liu, Neng-Hao Yu, Liwei Chan, Yi-Hao Peng, Wei-Zen Sun, Mike Y. Chen IEEE Conference on Virtual Reality and 3D User Interfaces (VR 2019) paper | video preview | talk

- PeriText: Utilizing Peripheral Vision for Reading Text on Augmented Reality Smart Glasses Pin-Sung Ku, Yu-Chih Lin, Yi-Hao Peng, Mike Y. Chen IEEE Conference on Virtual Reality and 3D User Interfaces (VR 2019) paper | video preview | talk

- SpeechBubbles: Enhancing Captioning Experiences for Deaf and Hard-of-Hearing People in Group Conversations Yi-Hao Peng, Ming-Wei Hsu, Paul Taele, Ting-Yu Lin, Po-En Lai, Leon Hsu, Tzu-Chuan Chen, Te-Yen Wu, Yu-An Chen, Hsien-Hui Tang, Mike Y. Chen ACM Conference on Human Factors in Computing Systems (CHI 2018) paper | video preview | talk

Press Coverage

- Researchers Say Head-mounted Haptics Can Combat Smooth Locomotion Discomfort in VR (covered Project WalkingVibe), Road to VR, May 2020

- ResearchKit and CareKit Reimagined (covered Project PersonalTouch), Apple Worldwide Developers Conference, session 217 (02:57), June 2019

- The Radical Frontier of Inclusive Design (covered Project SpeechBubbles), Fast Company, May 2018